This post aims to explain mathematically how populations change.

Our first attempt is based on ideas put forward in Thomas Malthus’s “An Essay on the Principle of Population”, published in 1798.

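Let $p(t)$ denote the total population at time $t$.

Assume that in a small interval $\Delta t$, births and deaths are proportional to $p(t)$ and $\Delta t$; i.e.,

births = $a\,p(t)\,\Delta t$

deaths = $b\,p(t)\,\Delta t$

where $a, b$ are constants.

It follows that the change of total population during the time interval $\Delta t$ is

$$p(t+\Delta t) - p(t) = a\,p(t)\,\Delta t - b\,p(t)\,\Delta t = r\,p(t)\,\Delta t$$

where $r = a - b$.

Dividing by $\Delta t$ and taking the limit as $\Delta t \to 0$, we have

$$\lim_{\Delta t \to 0} \frac{p(t+\Delta t) - p(t)}{\Delta t} = r\,p(t)$$

which is

$$\frac{dp}{dt} = r\,p \tag{1}$$

a first order differential equation.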

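Since (1) can be written as

$$\frac{1}{p}\frac{dp}{dt} = r,$$

integrating with respect to $t$; i.e.,

$$\int \frac{1}{p}\frac{dp}{dt}\,dt = \int r\,dt$$

leads to

$$\ln p = rt + c$$

where $c$ is the constant of integration.

If $p = p_0$ at $t = 0$, we have

$$c = \ln p_0$$

and so

$$p(t) = p_0 e^{rt} \tag{2}$$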

The result of our first attempt shows that the behavior of the population depends on the sign of the constant $r$. We have exponential growth if $r > 0$, exponential decay if $r < 0$, and no change if $r = 0$.
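The three regimes can be checked numerically. The following is a minimal sketch, assuming the closed form $p(t) = p_0 e^{rt}$; the initial population, the rates and the function names `malthus` and `euler_malthus` are hypothetical choices for illustration. It also steps the small-interval rule "change = $r\,p\,\Delta t$" forward in time, which should roughly agree with the closed form when $\Delta t$ is small.

```python
import math


def malthus(p0, r, t):
    """Closed-form solution of dp/dt = r * p with p(0) = p0."""
    return p0 * math.exp(r * t)


def euler_malthus(p0, r, t_end, steps=100_000):
    """Step the rule 'change over dt is r * p * dt' forward (forward Euler)."""
    dt = t_end / steps
    p = p0
    for _ in range(steps):
        p += r * p * dt
    return p


p0 = 100.0  # hypothetical initial population
for r in (0.02, 0.0, -0.02):  # growth, no change, decay
    exact = malthus(p0, r, 50.0)
    approx = euler_malthus(p0, r, 50.0)
    print(f"r = {r:+.2f}  closed form = {exact:.4f}  stepped = {approx:.4f}")
```

With a positive rate the printed population ends above its starting value, with a negative rate below it, and with a zero rate it is unchanged.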

The world population has been on an upward trend ever since such data has been collected (see “World Population by Year”).

Qualitatively, our model (2) with $r > 0$ captures this trend. However, it also predicts that the world population would grow exponentially without limit. That is most unlikely to occur, since there are so many limiting factors to growth: lack of food, insufficient energy, overcrowding, disease and war.

Therefore, it is doubtful that model (1) is The One.

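Our second attempt makes a modification to (1). It takes the limiting factors into consideration by replacing the constant $r$ in (1) with a function $r(p)$. Namely,

$$r(p) = \alpha - \beta p \tag{3}$$

where $\alpha$ and $\beta$ are both positive constants.

Replacing $r$ in (1) with (3),

$$\frac{dp}{dt} = (\alpha - \beta p)\,p \tag{4}$$

Since $r(p)$ is a monotonically decreasing function, (4) shows that as the population grows, the growth slows down due to the limiting factors.

Let $p_\infty = \frac{\alpha}{\beta}$. Then (4) becomes equation (5), $\frac{dp}{dt} = \beta\,p\,(p_\infty - p)$: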

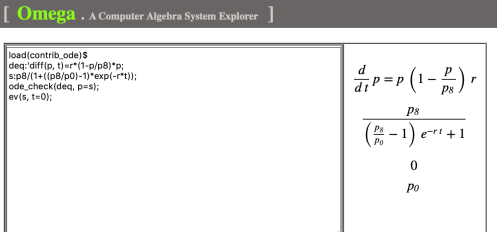

This is the Logistic Differential Equation.

Written differently as

$$\frac{dp}{dt} - \beta p_\infty\,p = -\beta p^2,$$

the Logistic Differential Equation is also a Bernoulli equation (see “Meeting Mr. Bernoulli”).
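For readers who want to see why the Bernoulli form is convenient, here is a brief worked sketch. It assumes the logistic equation is written as $\frac{dp}{dt} - \beta p_\infty p = -\beta p^2$ and uses the standard substitution $u = 1/p$ (the symbol $u$ is introduced here only for illustration). Dividing the equation by $-p^2$ turns it into a linear equation in $u$:

```latex
\begin{aligned}
u &= \frac{1}{p},
\qquad
\frac{du}{dt} = -\frac{1}{p^{2}}\,\frac{dp}{dt} \\[4pt]
\frac{dp}{dt} - \beta p_\infty\, p = -\beta p^{2}
\;&\Longrightarrow\;
-\frac{1}{p^{2}}\frac{dp}{dt} + \frac{\beta p_\infty}{p} = \beta
\;\Longrightarrow\;
\frac{du}{dt} + \beta p_\infty\, u = \beta
\end{aligned}
```

The last equation is first order and linear in $u$, so it can be handled with an integrating factor.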

Let’s understand (5) geometrically without solving it.

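Two constant functions, $p = 0$ and $p = p_\infty$, are solutions of (5), since

$$\frac{d(0)}{dt} = 0 = \beta \cdot 0 \cdot (p_\infty - 0)$$

and

$$\frac{d(p_\infty)}{dt} = 0 = \beta\,p_\infty\,(p_\infty - p_\infty).$$

Plotting $\frac{dp}{dt}$ vs. $p$ in Fig. 1, the points $p = 0$ and $p = p_\infty$ are where the curve of $\frac{dp}{dt}$ intersects the axis of $p$.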

Fig. 1

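At a point where $p > p_\infty$, since $\frac{dp}{dt} < 0$, $p$ will decrease; i.e., the point moves left toward $p_\infty$.

Similarly, at a point where $0 < p < p_\infty$, $\frac{dp}{dt} > 0$ implies that $p$ will increase and the point moves right toward $p_\infty$.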
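The same reading of the $\frac{dp}{dt}$ vs. $p$ plot can be sketched in a few lines of code. This is only an illustration, assuming the logistic equation is written as $\frac{dp}{dt} = \beta\,p\,(p_\infty - p)$; the values of `beta` and `p_inf` and the function names are hypothetical.

```python
def growth_rate(p, beta, p_inf):
    """Right-hand side of the logistic equation: dp/dt = beta * p * (p_inf - p)."""
    return beta * p * (p_inf - p)


def direction(p, beta, p_inf):
    """Describe how p changes, based only on the sign of dp/dt."""
    rate = growth_rate(p, beta, p_inf)
    if rate > 0:
        return "increases"
    if rate < 0:
        return "decreases"
    return "stays constant"


beta, p_inf = 0.001, 1000.0  # hypothetical parameter values
for p in (0.0, 250.0, 1000.0, 1500.0):
    print(f"p = {p:7.1f}: dp/dt = {growth_rate(p, beta, p_inf):8.2f}, p {direction(p, beta, p_inf)}")
```

Sample populations below `p_inf` are reported as increasing, those above it as decreasing, and the two constant solutions as staying put.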

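The model equation can also tell the manner in which $p$ approaches $p_\infty$.

Differentiating (5) with respect to $t$,

$$\frac{d^2p}{dt^2} = \beta\,(p_\infty - 2p)\,\frac{dp}{dt} = \beta^2\,p\,(p_\infty - p)(p_\infty - 2p).$$

As an equation with unknown $p$, $\frac{d^2p}{dt^2} = 0$ has three zeros: $0$, $\frac{p_\infty}{2}$ and $p_\infty$.

Therefore,

$\frac{d^2p}{dt^2} > 0$ if $0 < p < \frac{p_\infty}{2}$,

$\frac{d^2p}{dt^2} < 0$ if $\frac{p_\infty}{2} < p < p_\infty$

and

$\frac{d^2p}{dt^2} > 0$ if $p > p_\infty$.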
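These sign claims are easy to spot-check. The sketch below assumes the logistic equation is written as $\frac{dp}{dt} = \beta\,p\,(p_\infty - p)$, so that the chain rule gives $\frac{d^2p}{dt^2} = \beta\,(p_\infty - 2p)\,\frac{dp}{dt}$; the parameter values and the function name `second_derivative` are hypothetical.

```python
def second_derivative(p, beta, p_inf):
    """d2p/dt2 for dp/dt = beta * p * (p_inf - p), obtained via the chain rule."""
    first = beta * p * (p_inf - p)          # dp/dt
    return beta * (p_inf - 2.0 * p) * first  # d/dp of the right-hand side, times dp/dt


beta, p_inf = 0.001, 1000.0  # hypothetical parameter values
samples = {
    "0 < p < p_inf/2": 250.0,
    "p = p_inf/2": 500.0,
    "p_inf/2 < p < p_inf": 750.0,
    "p > p_inf": 1500.0,
}
for label, p in samples.items():
    value = second_derivative(p, beta, p_inf)
    sign = "positive" if value > 0 else "negative" if value < 0 else "zero"
    print(f"{label:22s} d2p/dt2 is {sign}")
```

The sample at half of `p_inf` sits exactly on a zero of the second derivative, which is where a solution curve changes its bending direction.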

Consequently, the solution of the initial-value problem (6), consisting of (5) together with the initial condition $p(0) = p_0$,

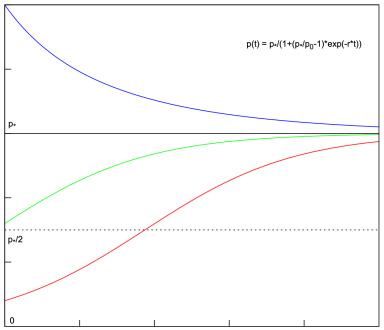

where $p_0 > 0$ and $p_0 \neq p_\infty$, behaves in the manner illustrated in Fig. 2.

Fig. 2

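If $p_0 > p_\infty$, $p$ approaches $p_\infty$ on a concave-up curve. Otherwise, when $\frac{p_\infty}{2} \le p_0 < p_\infty$, $p$ moves along a concave-down curve. For $0 < p_0 < \frac{p_\infty}{2}$, the curve is concave up first. It turns concave down after $p$ reaches $\frac{p_\infty}{2}$.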

Next, let’s solve the initial-value problem analytically for $p(t)$.

Instead of using the result from “Meeting Mr. Bernoulli“, we will start from scratch.

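At $t$ where $p(t) \neq 0$ and $p(t) \neq p_\infty$, we re-write (5) as

$$\frac{1}{p\,(p_\infty - p)}\frac{dp}{dt} = \beta.$$

Expressed in partial fractions,

$$\frac{1}{p_\infty}\left(\frac{1}{p} + \frac{1}{p_\infty - p}\right)\frac{dp}{dt} = \beta.$$

Integrating it with respect to $t$,

$$\int \left(\frac{1}{p} + \frac{1}{p_\infty - p}\right)\frac{dp}{dt}\,dt = \int \beta p_\infty\,dt$$

gives

$$\ln|p| - \ln|p_\infty - p| = \beta p_\infty t + c$$

where $c$ is the constant of integration.

i.e.,

$$\ln\left|\frac{p}{p_\infty - p}\right| = \beta p_\infty t + c.$$

Since $p(0) = p_0$, we have

$$c = \ln\left|\frac{p_0}{p_\infty - p_0}\right|$$

and so

$$\ln\left|\frac{p\,(p_\infty - p_0)}{p_0\,(p_\infty - p)}\right| = \beta p_\infty t.$$

Hence, since the ratio inside the absolute value equals $1$ at $t = 0$ and does not change sign while $p \neq 0$ and $p \neq p_\infty$,

$$\frac{p\,(p_\infty - p_0)}{p_0\,(p_\infty - p)} = e^{\beta p_\infty t}.$$

Solving for $p$ gives

$$p(t) = \frac{p_\infty}{1 + \left(\frac{p_\infty}{p_0} - 1\right)e^{-\beta p_\infty t}} \tag{7}$$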
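As a sanity check on the algebra, the closed form can be compared with a direct numerical integration. The sketch below assumes the logistic equation is $\frac{dp}{dt} = \beta\,p\,(p_\infty - p)$ and takes (7) to be $p(t) = p_\infty / \left(1 + (p_\infty/p_0 - 1)e^{-\beta p_\infty t}\right)$. The integrator is a hand-written classical Runge-Kutta scheme, and all parameter values and function names are hypothetical.

```python
import math


def logistic_exact(t, p0, beta, p_inf):
    """Closed form: p(t) = p_inf / (1 + (p_inf/p0 - 1) * exp(-beta * p_inf * t))."""
    return p_inf / (1.0 + (p_inf / p0 - 1.0) * math.exp(-beta * p_inf * t))


def logistic_rk4(p0, beta, p_inf, t_end, steps=1000):
    """Integrate dp/dt = beta * p * (p_inf - p) with classical 4th-order Runge-Kutta."""
    def f(p):
        return beta * p * (p_inf - p)

    dt = t_end / steps
    p = p0
    for _ in range(steps):
        k1 = f(p)
        k2 = f(p + 0.5 * dt * k1)
        k3 = f(p + 0.5 * dt * k2)
        k4 = f(p + dt * k3)
        p += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return p


beta, p_inf, t_end = 0.001, 1000.0, 10.0  # hypothetical parameter values
for p0 in (100.0, 600.0, 1500.0):  # below p_inf/2, between p_inf/2 and p_inf, above p_inf
    exact = logistic_exact(t_end, p0, beta, p_inf)
    numeric = logistic_rk4(p0, beta, p_inf, t_end)
    print(f"p0 = {p0:6.1f}  closed form = {exact:.6f}  RK4 = {numeric:.6f}")
```

The three starting values are chosen to cover one curve from each of the three families described for Fig. 2.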

We proceed to show that (7) expresses the value of $p(t)$, the solution to (6) where $p_0 > 0$ and $p_0 \neq p_\infty$, for all $t$ (see Fig. 3).

Fig. 3

From (7), we have

$$\lim_{t \to \infty} p(t) = p_\infty.$$

It validates Fig. 1.

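(7) also indicates that none of the curves in Fig. 2 touch the horizontal line $p = p_\infty$.

If this is not the case, then there exists at least one instance of $t$ where $p(t) = p_\infty$; i.e.,

$$\frac{p_\infty}{1 + \left(\frac{p_\infty}{p_0} - 1\right)e^{-\beta p_\infty t}} = p_\infty.$$

It follows that

$$\left(\frac{p_\infty}{p_0} - 1\right)e^{-\beta p_\infty t} = 0.$$

Since $e^{-\beta p_\infty t} > 0$ (see “Two Peas in a Pod, Part 2”), it must be true that

$$p_0 = p_\infty.$$

But this contradicts the fact that (7) is the solution of the initial-value problem (6) where $p_0 \neq p_\infty$.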

Reflected in Fig. 1 is that the points on either side of $p_\infty$ will never reach $p_\infty$. They only move ever closer to it.

Last, but not the least,

.

Hence the title of this post.